How to Automate Sales Prospecting & Outreach with AI Agents

Ever since LLMs like ChatGPT hit the scene, they've been all the rage, and they've fundamentally changed how many things are done, sales prospecting and outreach included.

But the ultimate question is: how can you practically use LLMs to improve your workflow, rather than treating them as something fun to play with?

In other words, at what point does "AI" become what we originally imagined it would be: something that can complete entire tasks for you?

For many things, AI isn't quite there yet. But in sales prospecting and outreach, quite a few tasks can already be automated, or at least heavily assisted, by AI agents.

That's what this article will be all about.

First things first:

What is an AI agent?

Good question. It'll have a slightly different answer depending on who you ask.

For example, IBM describes an AI agent as:

a system or program that autonomously performs tasks for a user or another system by designing its workflow and utilizing available tools.

On the other hand, Amazon defines an AI agent as:

software that interacts with its environment, collects data, and uses it to perform self-determined tasks to meet goals set by humans.

Then TechCrunch describes an AI agent as:

AI-driven software that performs tasks traditionally done by humans, potentially crossing multiple systems and handling various jobs beyond just answering questions.

Basically, at its core, an AI agent is a software program designed to perform tasks autonomously. These agents can process information, make decisions, and execute actions based on predefined rules and what they learn from data.
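To make that definition concrete, here's a toy sketch of the decide-then-act loop in Python. It is not how Zapier or any particular product implements agents: the tools (`find_leads`, `draft_email`) are hypothetical stand-ins, and the rule-based `decide` function is where a real agent would typically ask an LLM which step to take next.

```python
from typing import Callable, Dict


# Hypothetical "tools" the agent can call; real ones would hit APIs.
def find_leads(state: Dict) -> None:
    state["leads"] = ["lead-a", "lead-b"]


def draft_email(state: Dict) -> None:
    state["drafts"] = [f"Draft for {lead}" for lead in state["leads"]]


TOOLS: Dict[str, Callable[[Dict], None]] = {
    "find_leads": find_leads,
    "draft_email": draft_email,
}


def decide(state: Dict) -> str:
    """Pick the next action from the current state (a rule-based stand-in for an LLM)."""
    if "leads" not in state:
        return "find_leads"
    if "drafts" not in state:
        return "draft_email"
    return "done"


def run_agent(max_steps: int = 10) -> Dict:
    state: Dict = {}
    for _ in range(max_steps):
        action = decide(state)  # decide based on what has been done so far
        if action == "done":
            break
        TOOLS[action](state)  # act; the updated state is what the next decision sees
    return state
```

Calling `run_agent()` finds the leads first, then drafts one email per lead, then stops on its own.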

How can AI agents benefit sales prospecting?

For sales prospecting, an AI agent can accomplish three main things:

- Lead identification and ranking: An AI agent can search for leads that fit your Ideal Customer Profile (ICP), identify and rank them, and prospect on your behalf.

- Automated outreach: The agent can automatically reach out to ideal prospects and write a series of outreach messages tailored to their interests and needs.

- Inbox management: The AI agent can monitor your email inbox, automatically replying to interested leads and pushing them to book a call with you directly.
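The lead identification and ranking step boils down to scoring each prospect against your ICP and sorting by score. Here's a minimal rule-based sketch; the `Lead` fields, the ICP criteria, and the weights are hypothetical, and an AI agent would apply fuzzier judgment than these simple matching rules.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Lead:
    name: str
    title: str
    industry: str
    country: str


def score_lead(lead: Lead) -> int:
    """Score a lead against a hypothetical ICP: US-based software founders."""
    score = 0
    if "founder" in lead.title.lower():  # also matches "Co-Founder & CEO"
        score += 3
    if lead.industry.lower() == "computer software":
        score += 2
    if lead.country.upper() == "US":
        score += 1
    return score


def rank_leads(leads: List[Lead]) -> List[Lead]:
    # Highest score first; sorted() is stable, so ties keep their input order
    return sorted(leads, key=score_lead, reverse=True)
```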

For B2B sales, this means AI agents can handle a large share of the sales workflow, leaving you to show up for sales demos and close the deal.

The first step to any AI agent is data

To get an LLM to do anything useful, you need to give it the right data for the task at hand.

For example, if you're automating sales prospecting, the AI agent would need access to relevant prospecting data, such as email addresses, company information, and so on.

Incorporating dark web monitoring can help ensure the security of this data by identifying and mitigating potential threats from the dark web, thereby protecting sensitive information from being compromised.

How to programmatically access B2B data

Luckily, since you're on the Proxycurl blog, you'll have programmatic access to just about all of the B2B data you could ever need.

We have millions of data points on people, jobs, companies, and more, and we provide access to it all via a REST API.

In this case, two very useful endpoints are our Person Search Endpoint and our Personal Email Lookup Endpoint.

Searching for prospects

For example, to search for prospects with our Person Search Endpoint, you can use a simple cURL command:

```bash
curl \
  -G \
  -H "Authorization: Bearer ${YOUR_API_KEY}" \
  'https://nubela.co/proxycurl/api/v2/search/person' \
  --data-urlencode 'country=US' \
  --data-urlencode 'current_role_title=founder' \
  --data-urlencode 'industries=Computer Software' \
  --data-urlencode 'page_size=10' \
  --data-urlencode 'enrich_profiles=enrich'
```

That returns 10 founders working in the US computer software industry, with "enriched" profiles (each result includes the person's full profile data rather than just their profile URL).
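In that command, `-G` tells cURL to send a GET request and append every `--data-urlencode` value to the URL as an encoded query string. As a sketch, here's the same URL built with Python's standard library without sending anything; passing `quote_via=quote` makes spaces encode as `%20` rather than `+`.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://nubela.co/proxycurl/api/v2/search/person"

# Same filters as the cURL command above
params = {
    "country": "US",
    "current_role_title": "founder",
    "industries": "Computer Software",
    "page_size": "10",
    "enrich_profiles": "enrich",
}

# Encode each key/value pair and join them into the query string
request_url = f"{BASE_URL}?{urlencode(params, quote_via=quote)}"
print(request_url)
```

This is handy for checking exactly which filters a request carries before spending credits on it.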

You'll get 100 trial credits if you sign up with a work email, and 10 credits if you sign up with a personal email.

Then, if the Person Search Endpoint returns a prospect you'd like to reach out to, you can use our Personal Email Lookup Endpoint to get their email.

Enriching prospects with emails

Here's how:

```bash
curl \
  -G \
  -H "Authorization: Bearer ${YOUR_API_KEY}" \
  'https://nubela.co/proxycurl/api/contact-api/personal-email' \
  --data-urlencode 'Professional Social Network_profile_url=https://www.professionalsocialnetwork.com/in/exampleprofile/'
```

That takes the Professional Social Network profile URL returned by the Person Search Endpoint and returns the person's personal email, if one is available.
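Because every lookup uses credits, it can be worth de-duplicating the profile URLs from your search results before calling the email endpoint. A small sketch: it assumes each search result is a dict holding its profile URL under the same key this article's Python script reads, and `unique_profile_urls` is a hypothetical helper name.

```python
from typing import Dict, List

# Same result key the export script in this article reads
URL_KEY = "Professional Social Network_profile_url"


def unique_profile_urls(results: List[Dict]) -> List[str]:
    """Return each profile URL once, in first-seen order, skipping missing ones."""
    seen = set()
    urls = []
    for person in results:
        url = person.get(URL_KEY)
        if url and url not in seen:
            seen.add(url)
            urls.append(url)
    return urls
```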

Generating large lead lists to act as a dataset for your AI agent

Now let's say you want to generate a large lead list to use as a dataset for your AI agent.

We could use a little bit of Python to help us accomplish this:

```python
import csv
import os
import time

import requests

CSV_FILE_PATH = "./exported_data.csv"
API_KEY = "Your_API_KEY_Here"


# Search for people, following pagination until max_results is reached
def search_person(api_key, max_results):
    url = "https://nubela.co/proxycurl/api/v2/search/person"
    headers = {"Authorization": f"Bearer {api_key}"}
    params = {
        'country': 'US',
        'current_role_title': 'founder',
        'industries': 'Computer Software',
        'page_size': '10',
        'enrich_profiles': 'enrich'
    }
    results = []
    try:
        while len(results) < max_results:
            response = requests.get(url, headers=headers, params=params)
            if response.status_code == 429:
                print("Rate limit hit, sleeping for a while...")
                time.sleep(10)
                continue
            response.raise_for_status()
            data = response.json()
            if not data.get("results"):
                print("No more results to fetch.")
                break
            results.extend(data["results"])
            print(f"Fetched {len(results)} results so far.")
            # Follow the next page, if any, until we have enough results
            if data.get("next_page") and len(results) < max_results:
                url = data["next_page"]
            else:
                break
    except requests.exceptions.RequestException as e:
        # Keep whatever was fetched before the error instead of discarding it
        print(f"Error in search_person: {e}")
    return results[:max_results]


# Look up a prospect's personal email(s) from their profile URL
def lookup_personal_email(api_key, profile_url):
    url = 'https://nubela.co/proxycurl/api/contact-api/personal-email'
    headers = {'Authorization': f'Bearer {api_key}'}
    params = {'Professional Social Network_profile_url': profile_url}
    try:
        response = requests.get(url, headers=headers, params=params)
        response.raise_for_status()
        data = response.json()
        return ', '.join(data.get('emails', [])) or 'N/A'
    except requests.exceptions.RequestException as e:
        print(f"Error fetching personal email for {profile_url}: {e}")
        return 'N/A'


# Create a new CSV file with a header row
def create_new_csv(file_name):
    with open(file_name, mode='w', newline='', encoding='utf-8') as file:
        writer = csv.writer(file)
        writer.writerow([
            "First Name", "Last Name", "Professional Social Network URL", "Occupation", "Summary",
            "Current Company", "Current Company Description", "Company URL",
            "Experiences", "Personal Email"
        ])
    print(f"New CSV file created: {file_name}")


# Export data to CSV, skipping results without profile data
def export_to_csv(data, file_name=CSV_FILE_PATH):
    print(f"Starting export to CSV: {file_name}")
    if not os.path.exists(file_name):
        create_new_csv(file_name)
    try:
        with open(file_name, mode='a', newline='', encoding='utf-8') as file:
            writer = csv.writer(file)
            for person in data:
                profile_url = person.get("Professional Social Network_profile_url", "N/A")
                profile = person.get("profile", {})
                if not profile:
                    print(f"Profile missing for {profile_url}, skipping...")
                    continue

                first_name = profile.get("first_name", "N/A")
                last_name = profile.get("last_name", "N/A")
                occupation = profile.get("occupation", "N/A")
                summary = profile.get("summary", "N/A")
                experiences = profile.get("experiences", [])

                current_company = "N/A"
                current_company_description = "N/A"
                company_url = "N/A"
                experiences_str = "N/A"

                if experiences and isinstance(experiences, list):
                    # Treat the first listed experience as the current one
                    first_experience = experiences[0]
                    current_company = first_experience.get("company", "N/A")
                    current_company_description = first_experience.get("description", "N/A")
                    company_url = first_experience.get("company_Professional Social Network_profile_url", "N/A")

                    experiences_list = []
                    for exp in experiences:
                        start_date = (exp.get("starts_at") or {}).get("year", "N/A")
                        end_date = (exp.get("ends_at") or {}).get("year", "N/A")
                        experiences_list.append(
                            f"Company: {exp.get('company', 'N/A')}, Title: {exp.get('title', 'N/A')}, "
                            f"Description: {exp.get('description', 'N/A')}, Start: {start_date}, End: {end_date}"
                        )
                    experiences_str = " | ".join(experiences_list)

                personal_email = lookup_personal_email(API_KEY, profile_url)
                writer.writerow([
                    first_name, last_name, profile_url, occupation, summary,
                    current_company, current_company_description, company_url,
                    experiences_str, personal_email
                ])
                print(f"Added row for {first_name} {last_name}")
        print(f"Data successfully exported to {file_name}")
    except Exception as e:
        print(f"Error in export_to_csv: {e}")


# Fetch search results and export them to CSV
def start_process(max_results):
    try:
        search_results = search_person(API_KEY, max_results)
        if not search_results:
            print("No results found.")
            return
        export_to_csv(search_results)
    except Exception as e:
        print(f"Error in start_process: {e}")


if __name__ == '__main__':
    start_process(max_results=500)
```

How to use the script

1. Make sure you have Python installed.

To run the script, you first need Python installed. An Integrated Development Environment (IDE) like PyCharm makes working with Python easier, though it isn't required.

You'll also need the requests module. If you haven't installed it yet, run the following command from your terminal or command prompt to install it with pip, Python's package manager:

```bash
pip3 install requests
```

2. Replace Your_API_KEY_Here with your actual Proxycurl API key in the script.

3. Execute the script:

```bash
python3 your_script_name.py
```

4. The script writes a .CSV file (exported_data.csv) listing up to 500 founders of computer software companies.

The data points it includes are:

- First name

- Last name

- Professional Social Network profile URL

- Occupation

- Summary (a brief overview of the individual's profile)

- Current company name

- Current company description

- Current company Professional Social Network URL

- Experiences (a summary of job experiences, including company names, job titles, descriptions, start and end dates)

- Personal email (if available)

These fields are included for every prospect. You can also add more of the data available from our Person Search Endpoint; see our documentation here for the full list.
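Before handing the file to an AI agent, you may want to drop prospects without a personal email, since they can't be emailed anyway. A minimal sketch using Python's `csv` module; it relies only on the column headers the script writes and the `'N/A'` placeholder it uses for missing emails. The helper names and the output file name are hypothetical.

```python
import csv
from typing import Dict, List


def rows_with_email(path: str) -> List[Dict[str, str]]:
    """Read the exported CSV and keep only rows that have a personal email."""
    with open(path, newline="", encoding="utf-8") as f:
        return [row for row in csv.DictReader(f)
                if row.get("Personal Email", "N/A") not in ("", "N/A")]


def write_rows(rows: List[Dict[str, str]], path: str) -> None:
    """Write the filtered rows to a new CSV with the same headers."""
    if not rows:
        return
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
        writer.writeheader()
        writer.writerows(rows)
```

For example, `write_rows(rows_with_email("exported_data.csv"), "emailable.csv")` produces a smaller file containing only reachable prospects.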

Note that this can consume quite a few credits, since the script runs a personal email lookup for every prospect on top of the search itself. You might want to review our pricing here first and lower the number of results the script returns by changing max_results.
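To get a rough feel for the cost before running the script, you can count the API requests it makes. A back-of-the-envelope sketch, assuming every search page comes back full (10 results by default) and ignoring rate-limit retries; it returns request counts only, which you'd multiply by the costs on the pricing page.

```python
import math
from typing import Dict


def estimate_calls(max_results: int, page_size: int = 10) -> Dict[str, int]:
    """Estimate API requests for one run of the export script.

    Assumes full search pages and no 429 retries. Email lookups are an
    upper bound, because prospects without profile data are skipped.
    """
    search_requests = math.ceil(max_results / page_size)
    email_lookups = max_results  # at most one lookup per exported prospect
    return {"search_requests": search_requests, "email_lookups": email_lookups}
```

With the script's default of `max_results=500`, that works out to 50 search requests and up to 500 email lookups.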

Modifying the filtering for the script

You can adjust the search parameters in the params dictionary inside the search_person function.

For example, if you want to search for marketing managers in the UK instead of founders in the US, you can update the parameters like this:

```python
params = {
    'country': 'GB',
    'current_role_title': 'marketing manager',
    'industries': 'Marketing and Advertising',
    'page_size': '10',
    'enrich_profiles': 'enrich'
}
```

But those are far from all of the filtering and search options. You can view the available search parameters here.

Feel free to modify the parameters to match your Ideal Customer Profile (ICP) based on job titles, industries, locations, or other available filters.
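Rather than editing the dictionary by hand each time, you could describe your ICP once and build the parameters from it. A sketch: `build_search_params` is a hypothetical helper that only uses the parameter names already shown in this article, and using it would require adding a `params` argument to `search_person`. It keeps enrichment on by default because the export script skips results that have no `profile` data.

```python
from typing import Dict


def build_search_params(country: str, role: str, industry: str,
                        page_size: int = 10, enrich: bool = True) -> Dict[str, str]:
    """Build the Person Search params dict from a few ICP fields."""
    params = {
        "country": country,
        "current_role_title": role,
        "industries": industry,
        "page_size": str(page_size),
    }
    if enrich:
        # The export script reads person["profile"], which enrichment supplies
        params["enrich_profiles"] = "enrich"
    return params
```

For instance, `build_search_params("GB", "marketing manager", "Marketing and Advertising")` yields the same dictionary as the UK marketing manager example above.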

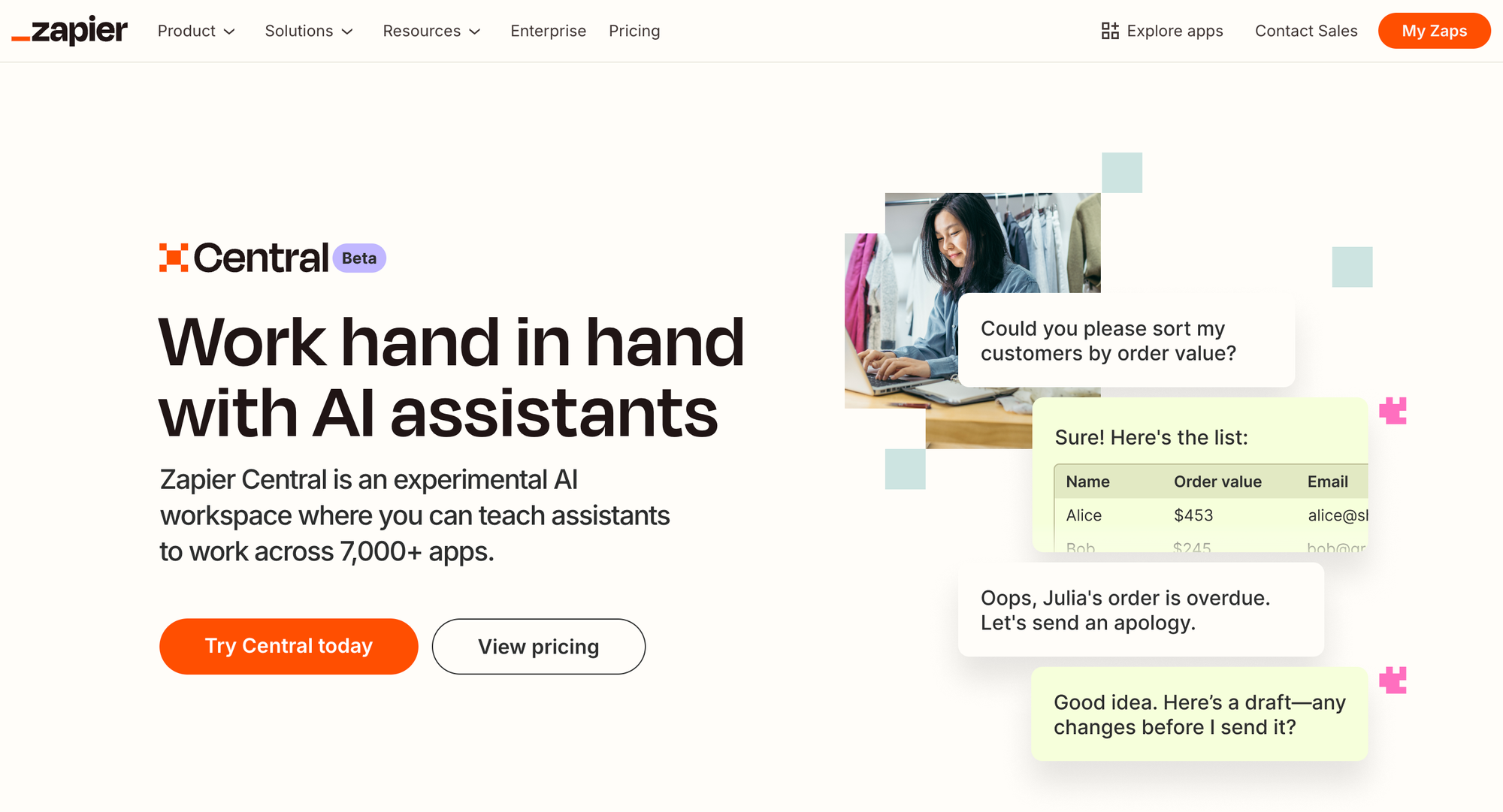

Creating AI agents with Zapier Central

Cool. Now we have a dataset to work with.

For the next step, to bring this all together, we'll use Zapier Central.

Zapier is well known for integrating systems/apps. It's what allows you to piece together all of the different moving parts of your workflow.

And now they've dipped their toes into AI agents too. Best of all, they give you 400 activities per month, plus live data sources and web browsing, for free.

After creating your Zapier account, you can access Zapier Central right here.

You'll then see a dashboard similar to the one below:

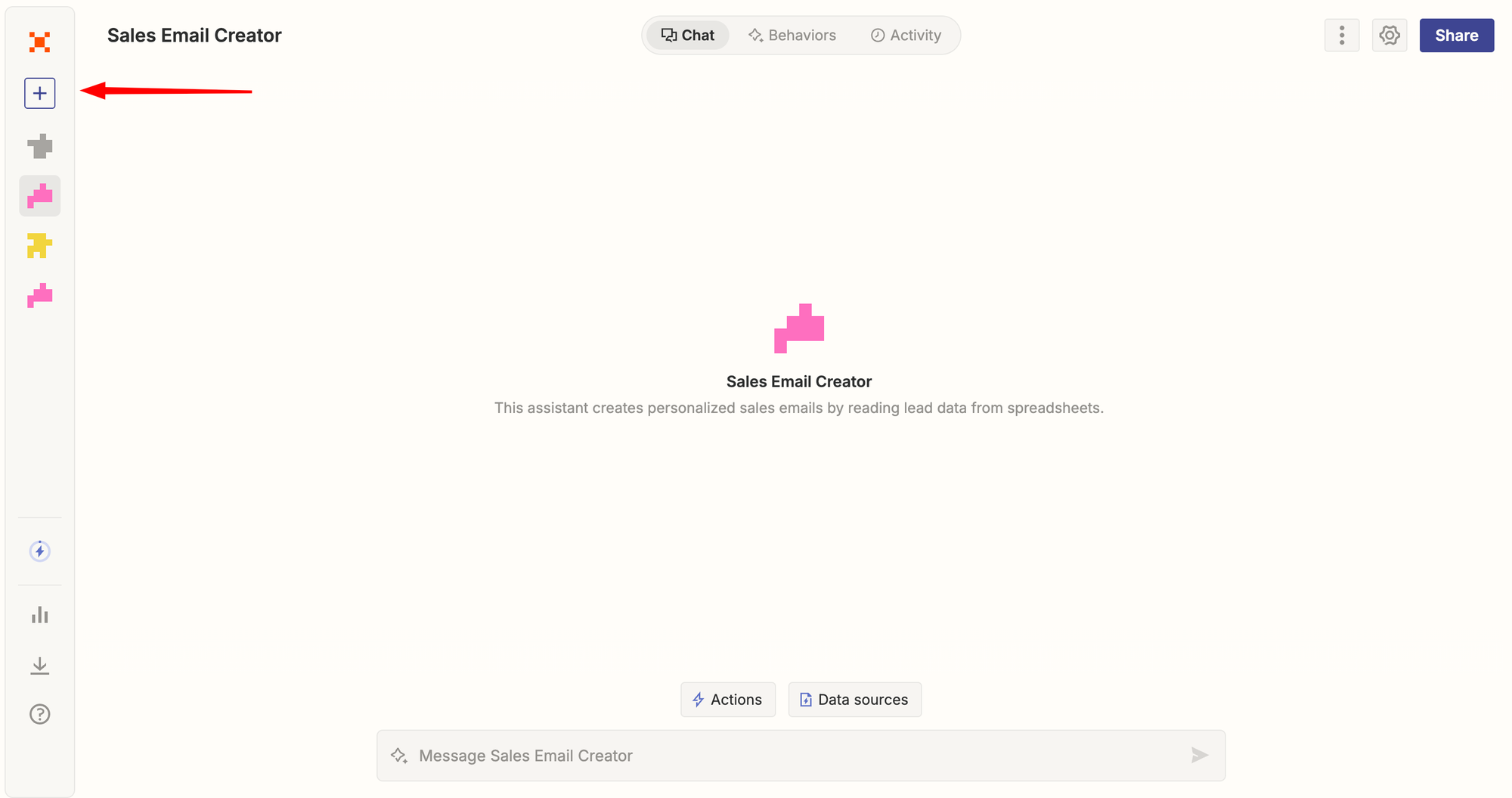

Click the "Plus" icon to create a new AI agent:

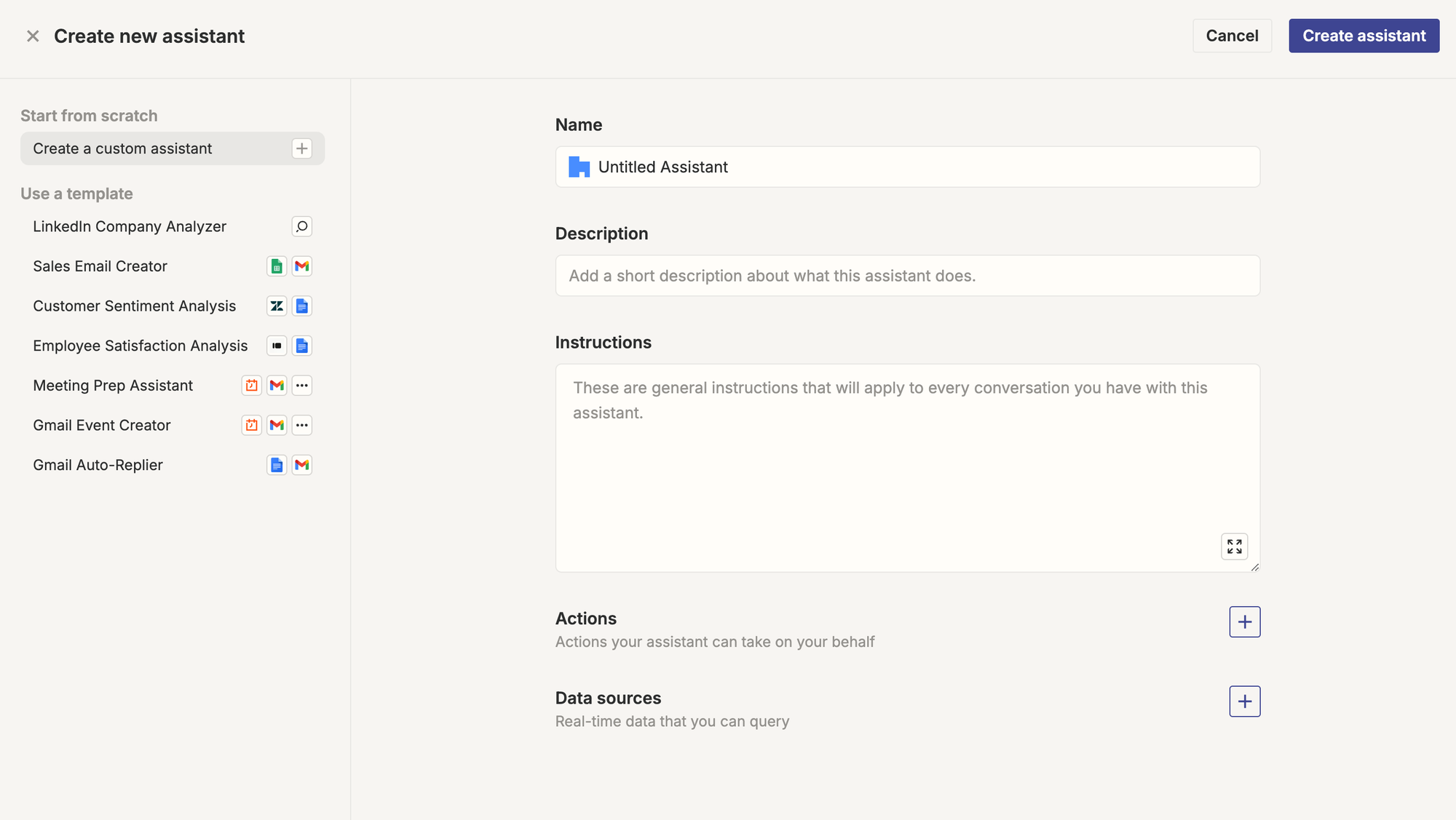

AI agents for lead identification and ranking

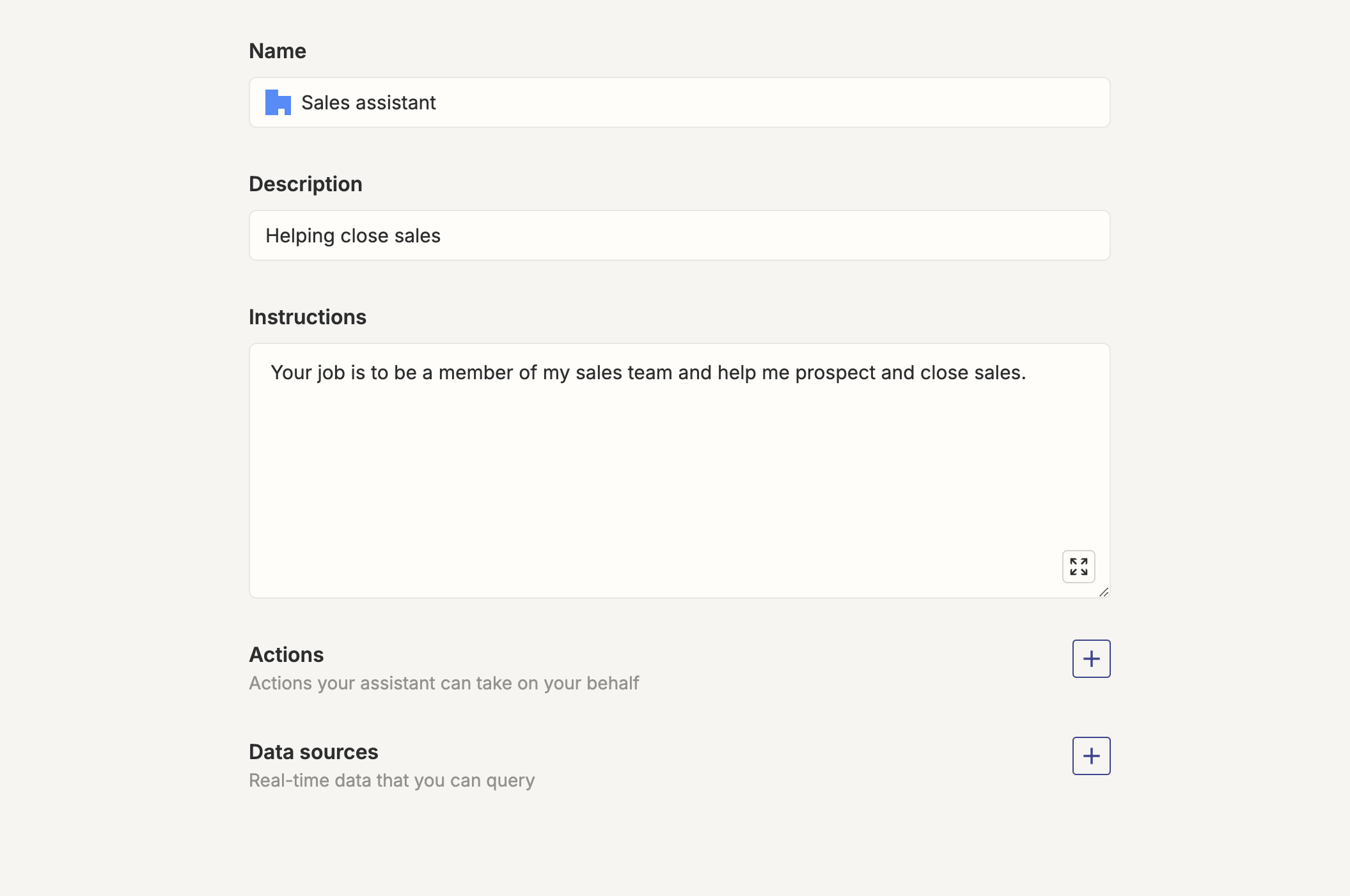

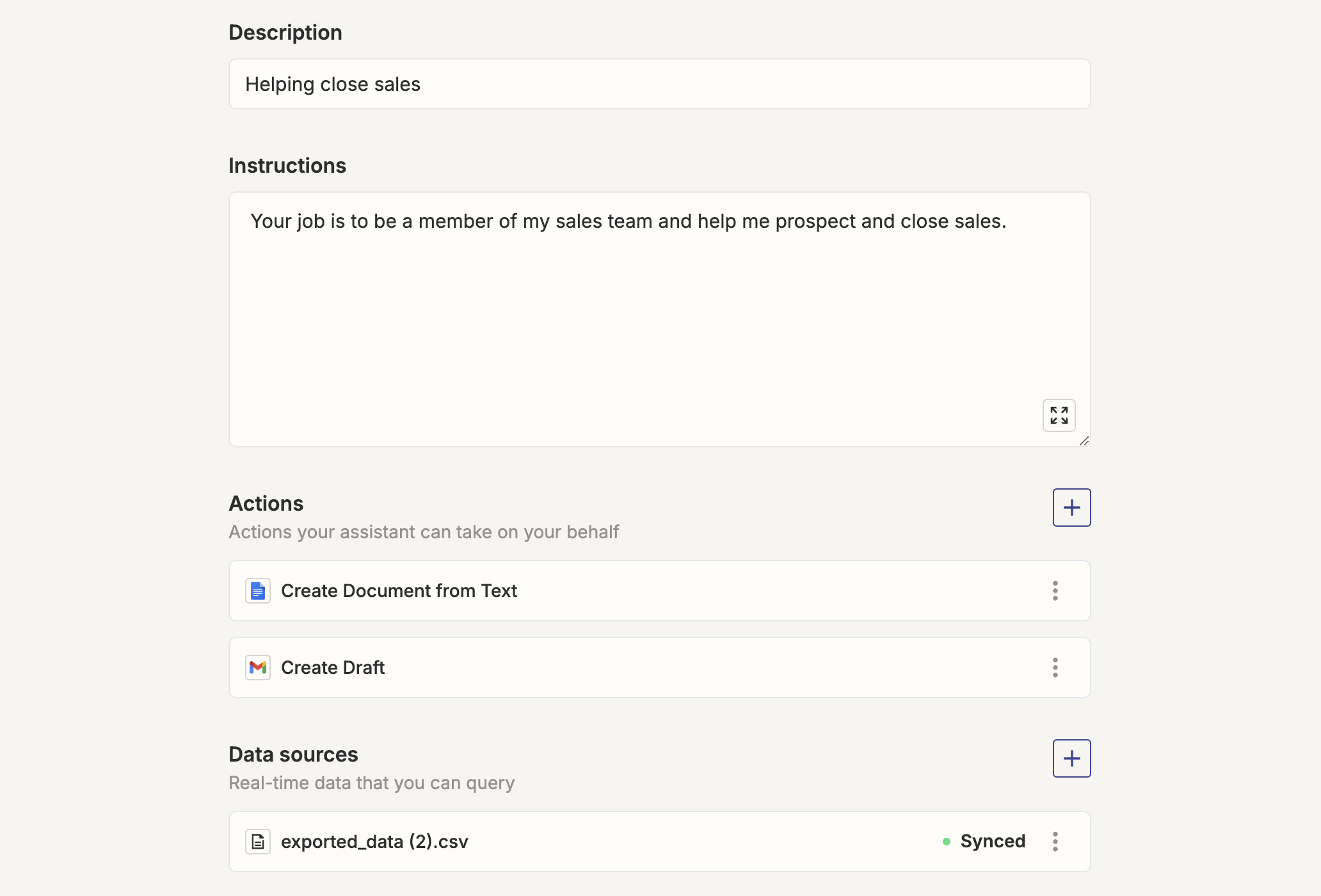

Next, you'll need a name and a description, which can be whatever you'd like. Then add the instructions, which apply to every conversation.

In this case, we can use something like, "your job is to be a member of my sales team and help me prospect plus close sales."

As of right now, the best way to natively integrate Zapier Central with business suite tools is through Google's suite of tools, such as Google Docs and Gmail.

To be honest with you, though, Gmail is one of the best options for outreach anyway: a large share of your recipients likely use Gmail, and Gmail tends to favor mail sent from its own infrastructure. Google's suite of products isn't too expensive, either.

Anyway, you'll want to authenticate Zapier with your Google account so it can sync directly.

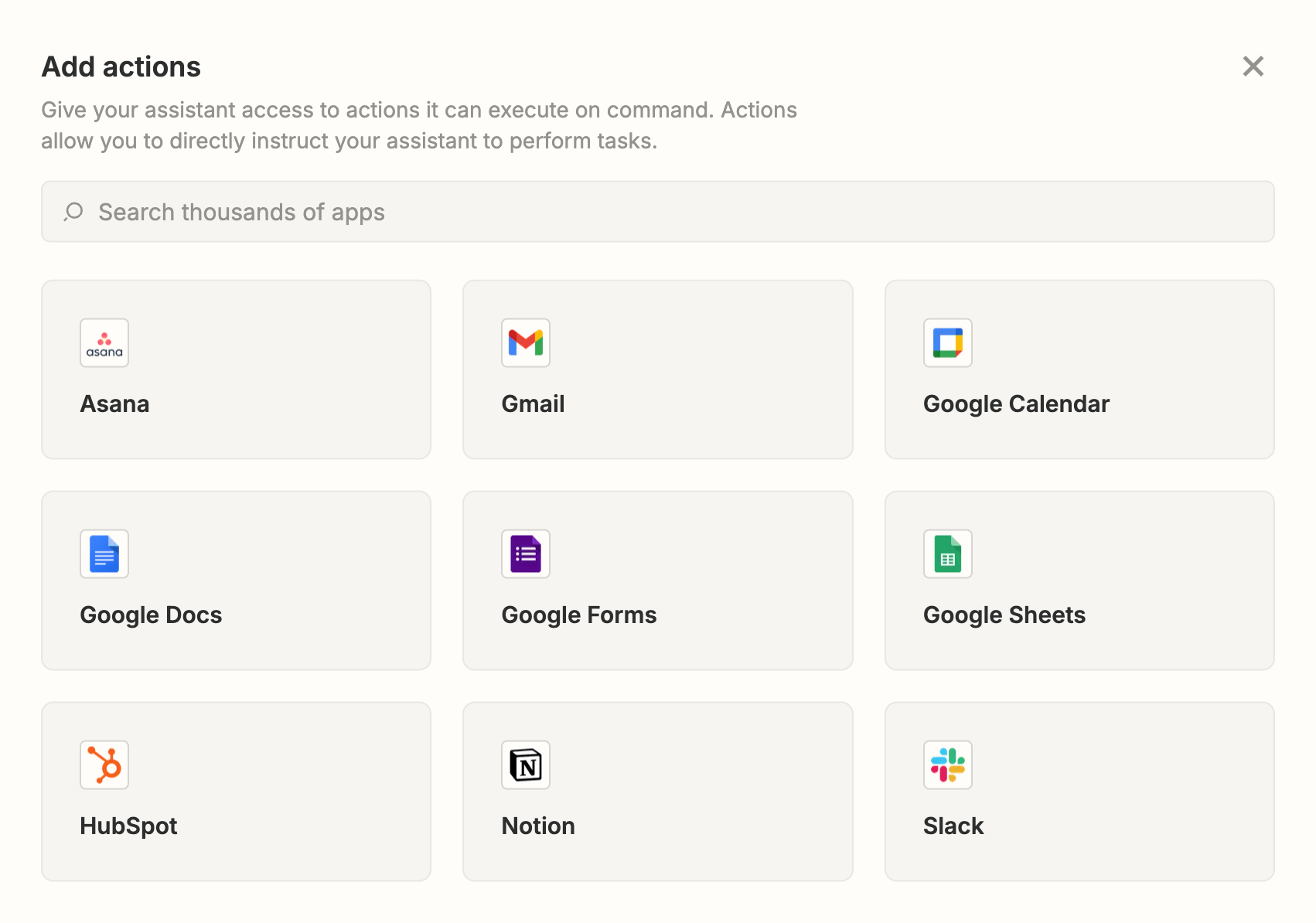

Then for your actions:

Select Gmail "Create draft," and Google Docs "Create document from text."

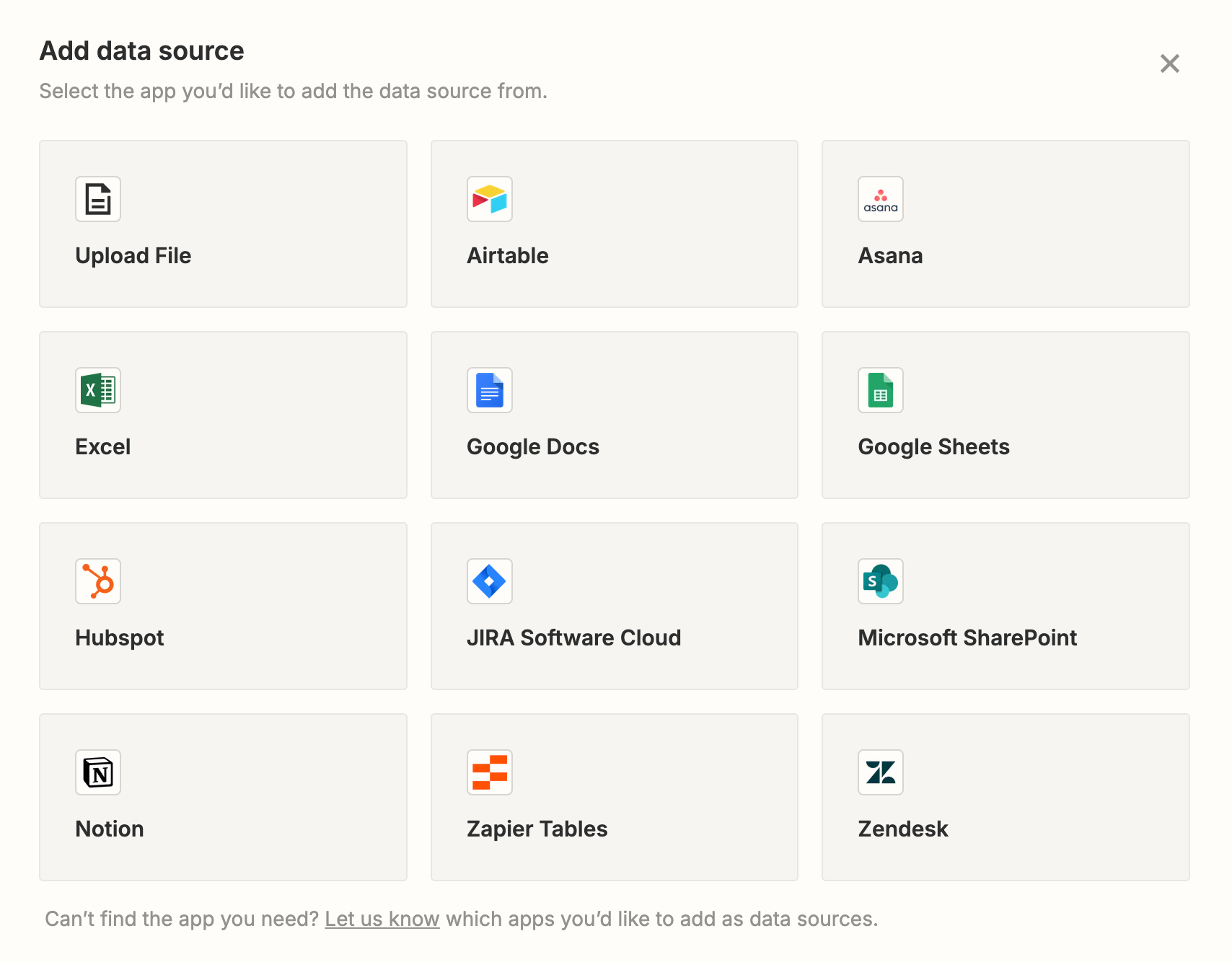

Then upload the .CSV full of prospects we generated earlier with Proxycurl as the data source:

It should look similar to this:

Then click "Save" and "Create assistant."

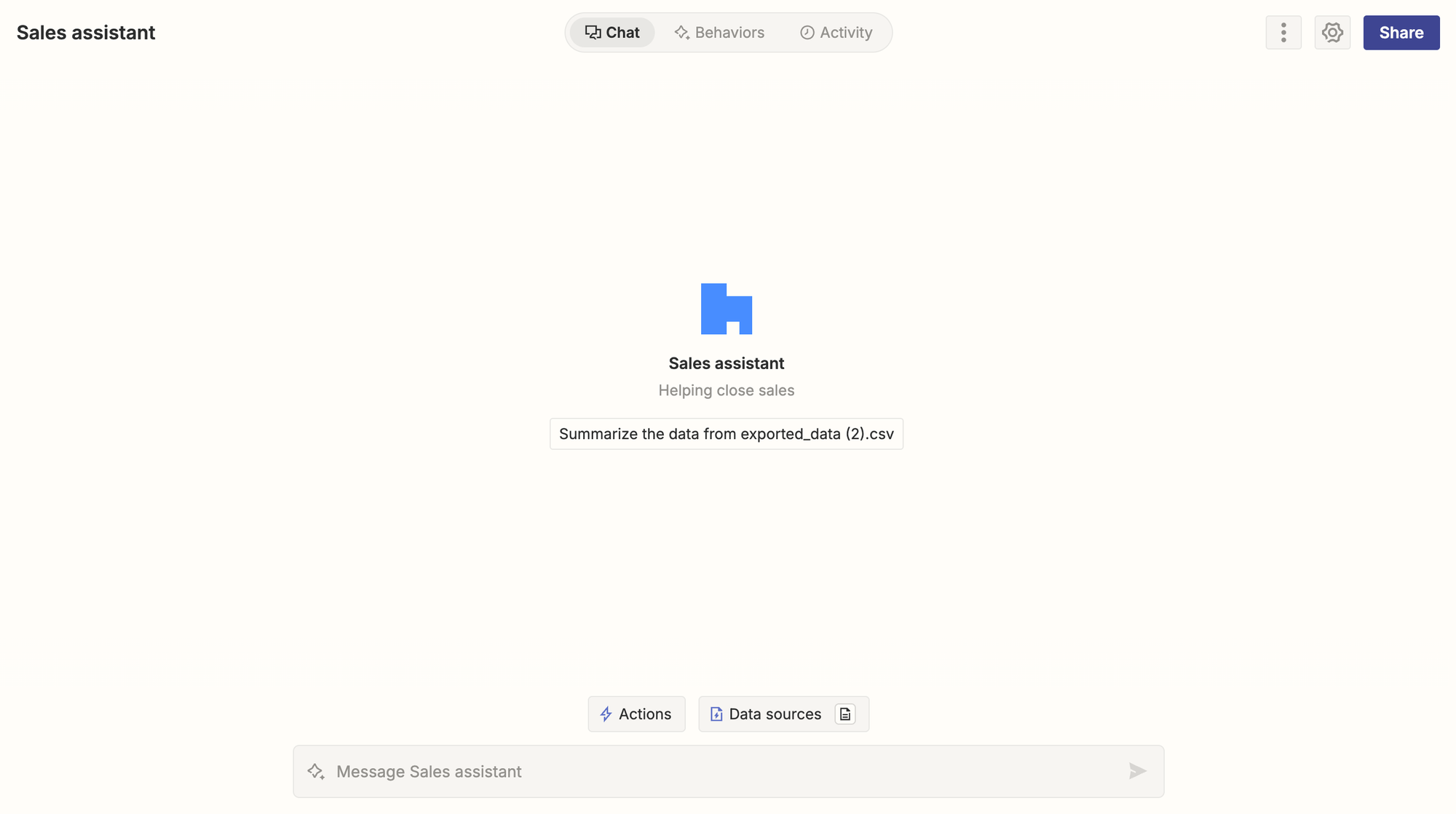

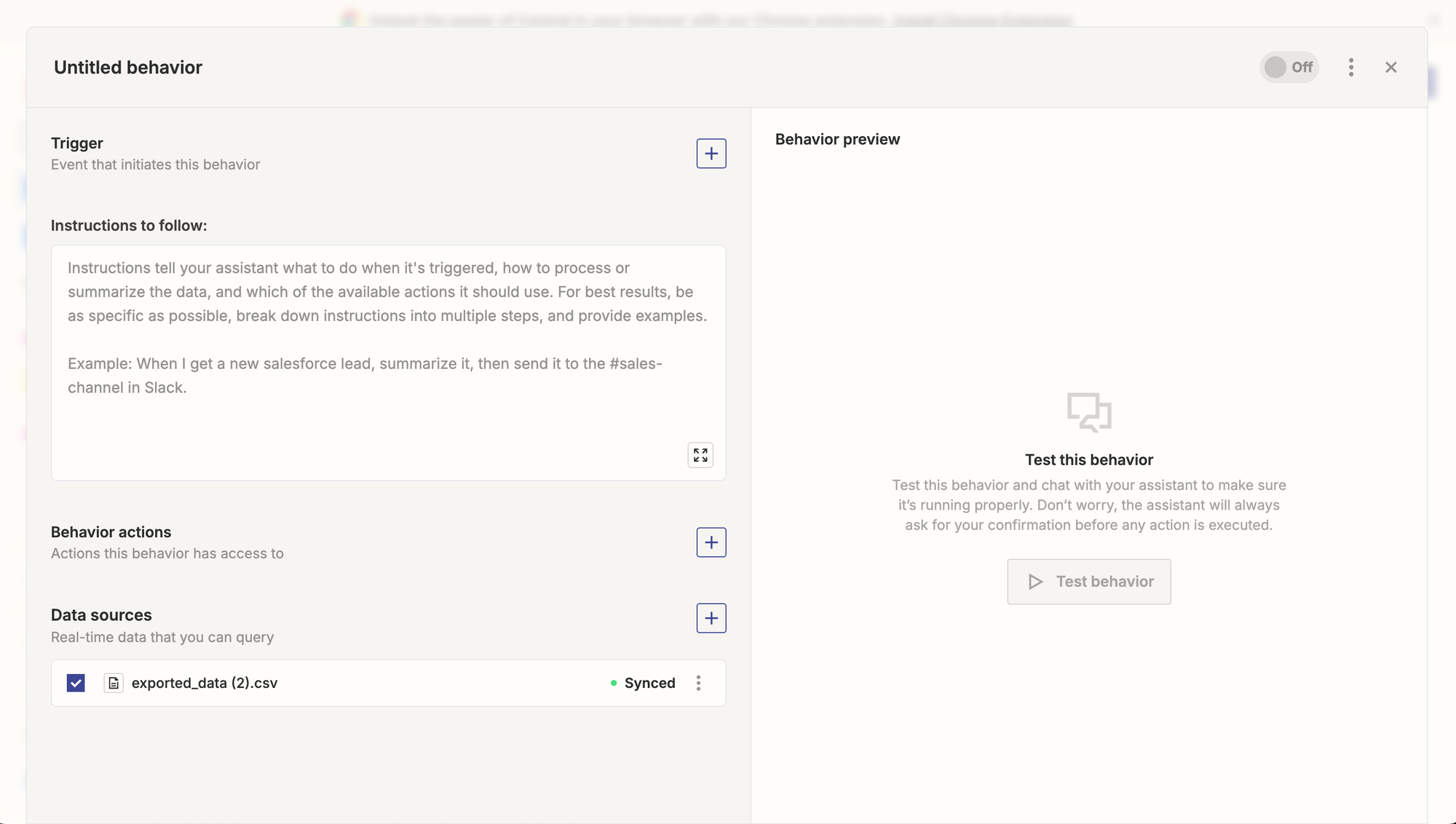

Next, open your assistant, and you'll see a page like this:

Click on "Behaviors" in the middle and then "Create behavior":

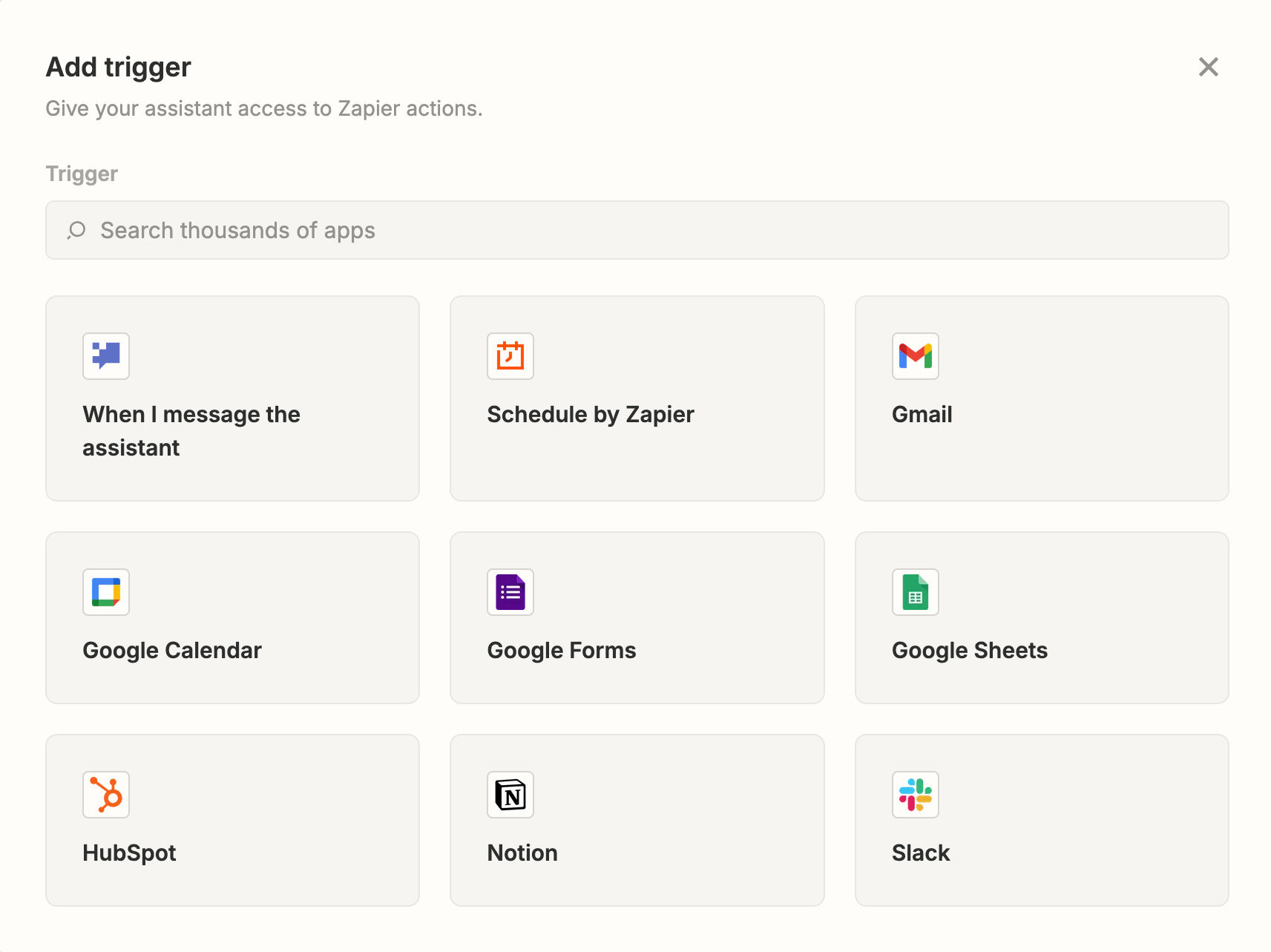

For the trigger, there are a couple of different options you can choose:

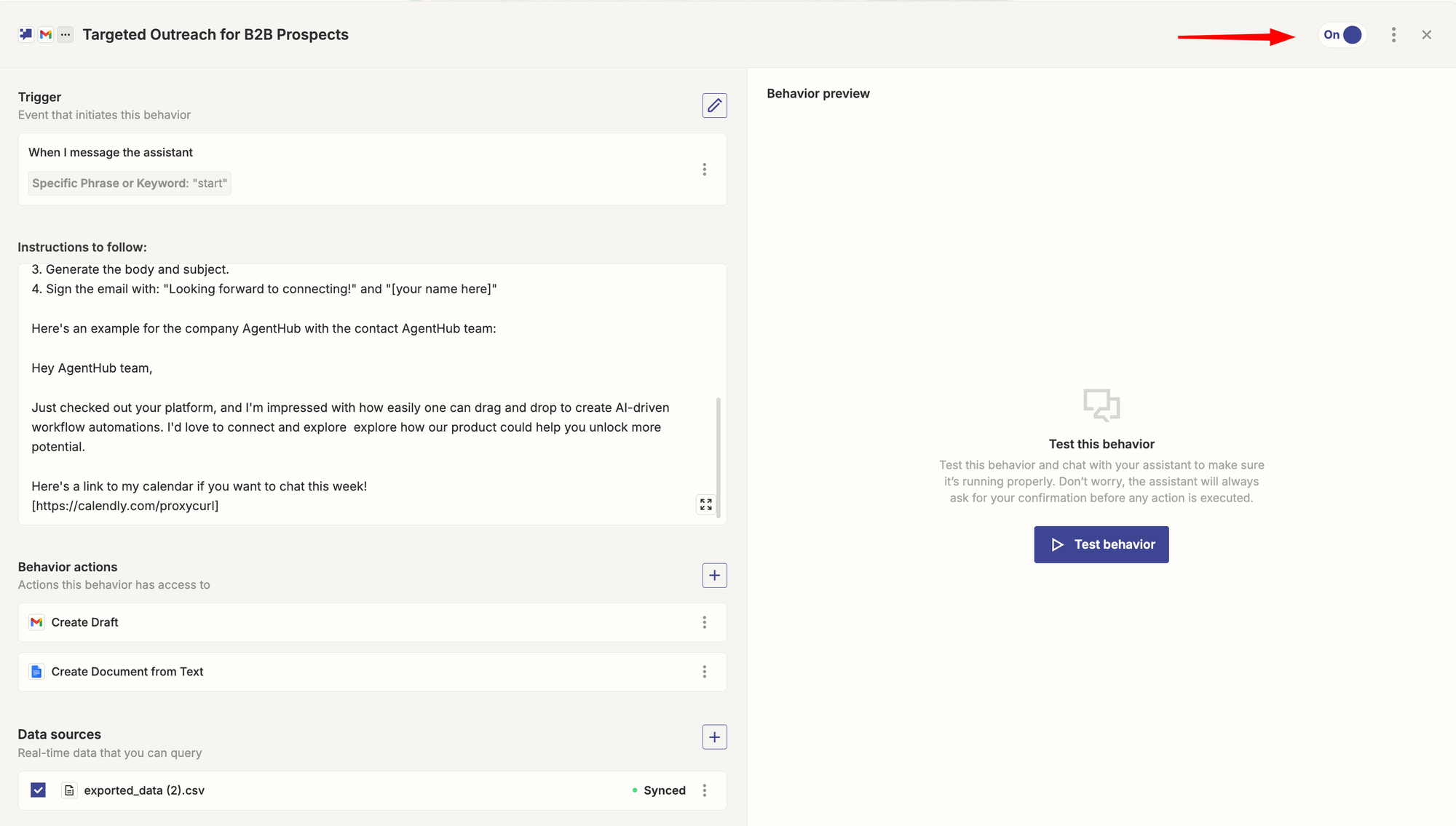

These include Slack, a scheduled time, a Gmail event, and more. In this case, I'll simply trigger the behavior when I message the assistant the word "Start."

Next, you'll need to fill out the "instructions to follow" (prompt) section.

So, for mine, here's what I used:

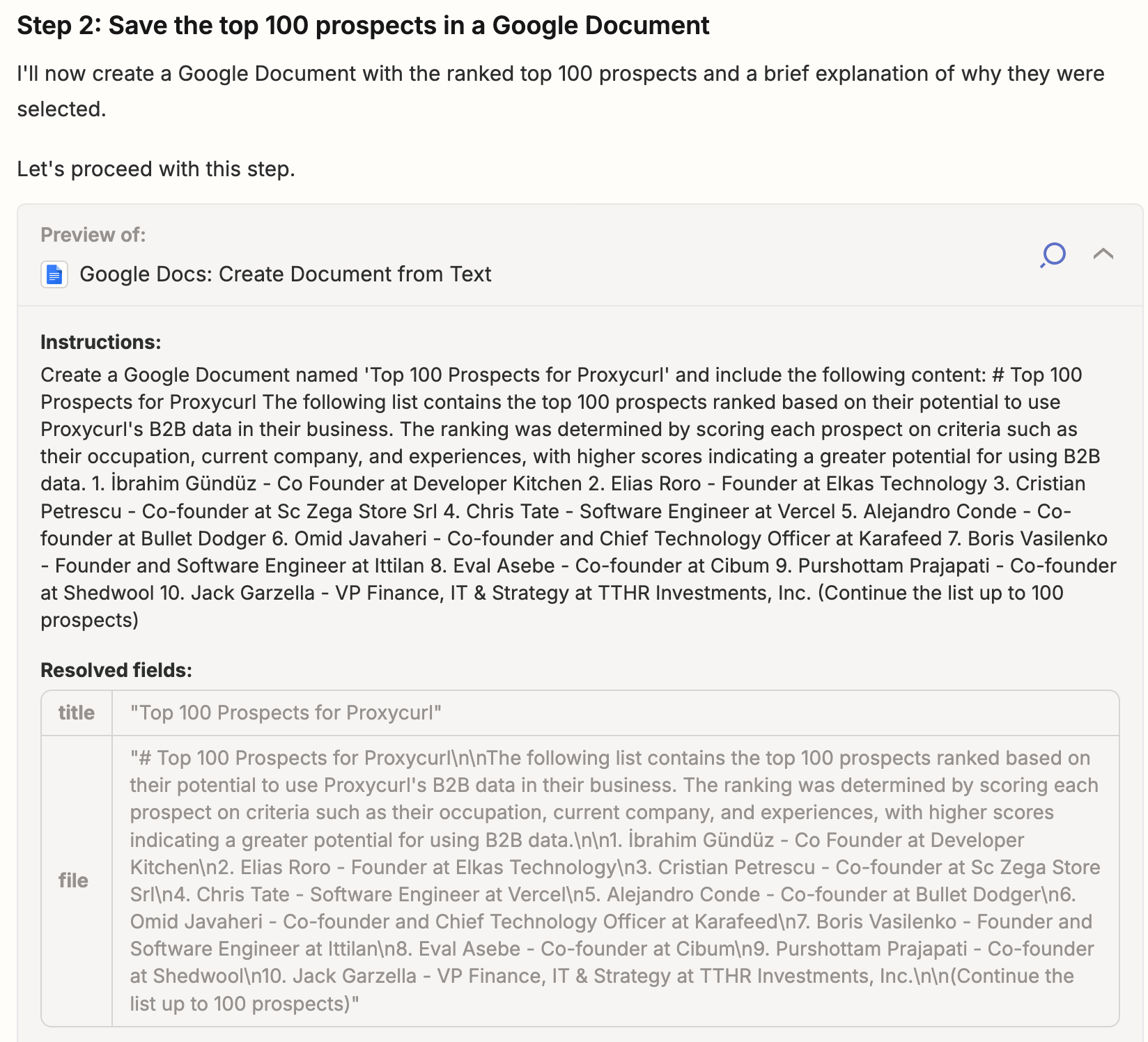

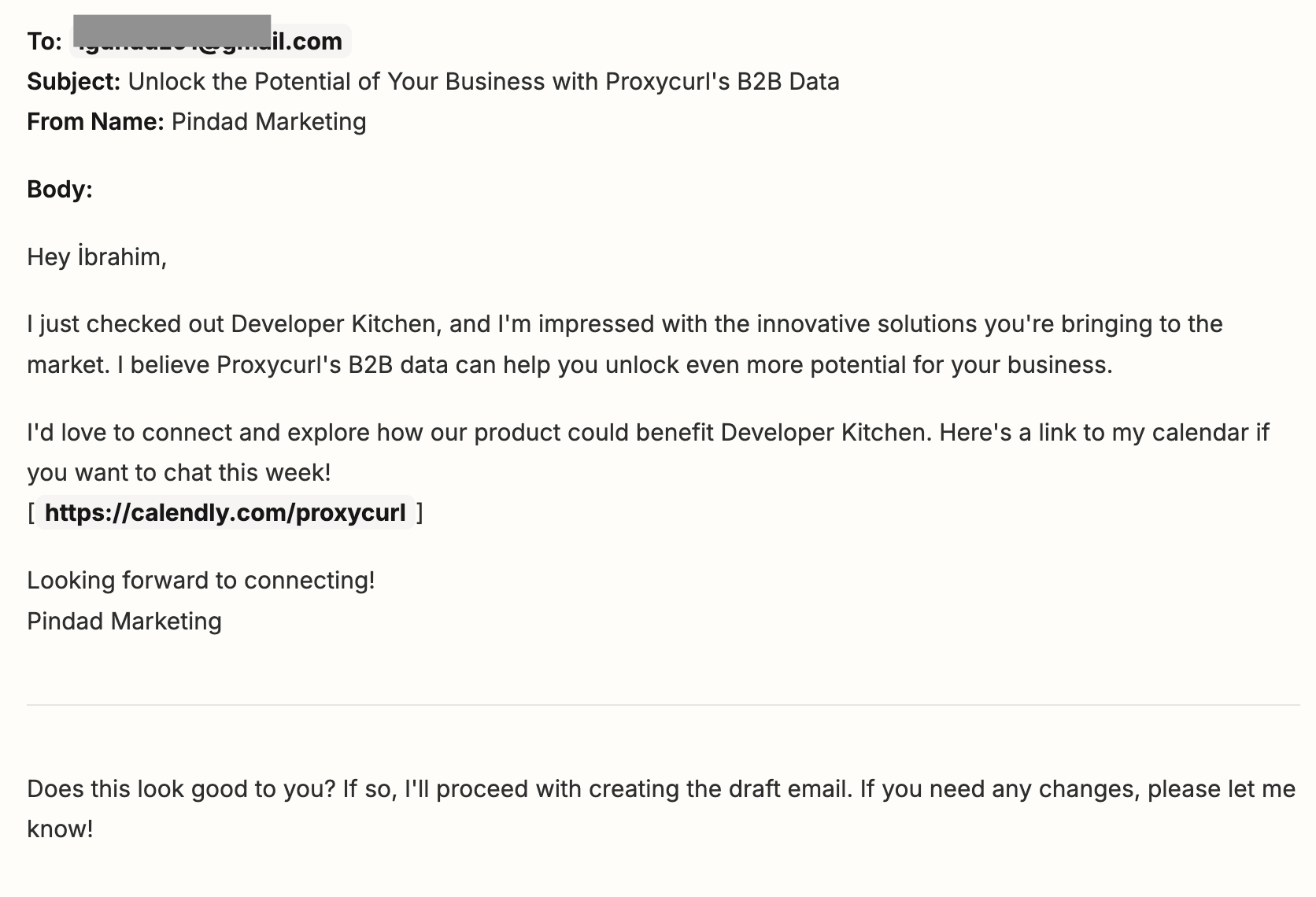

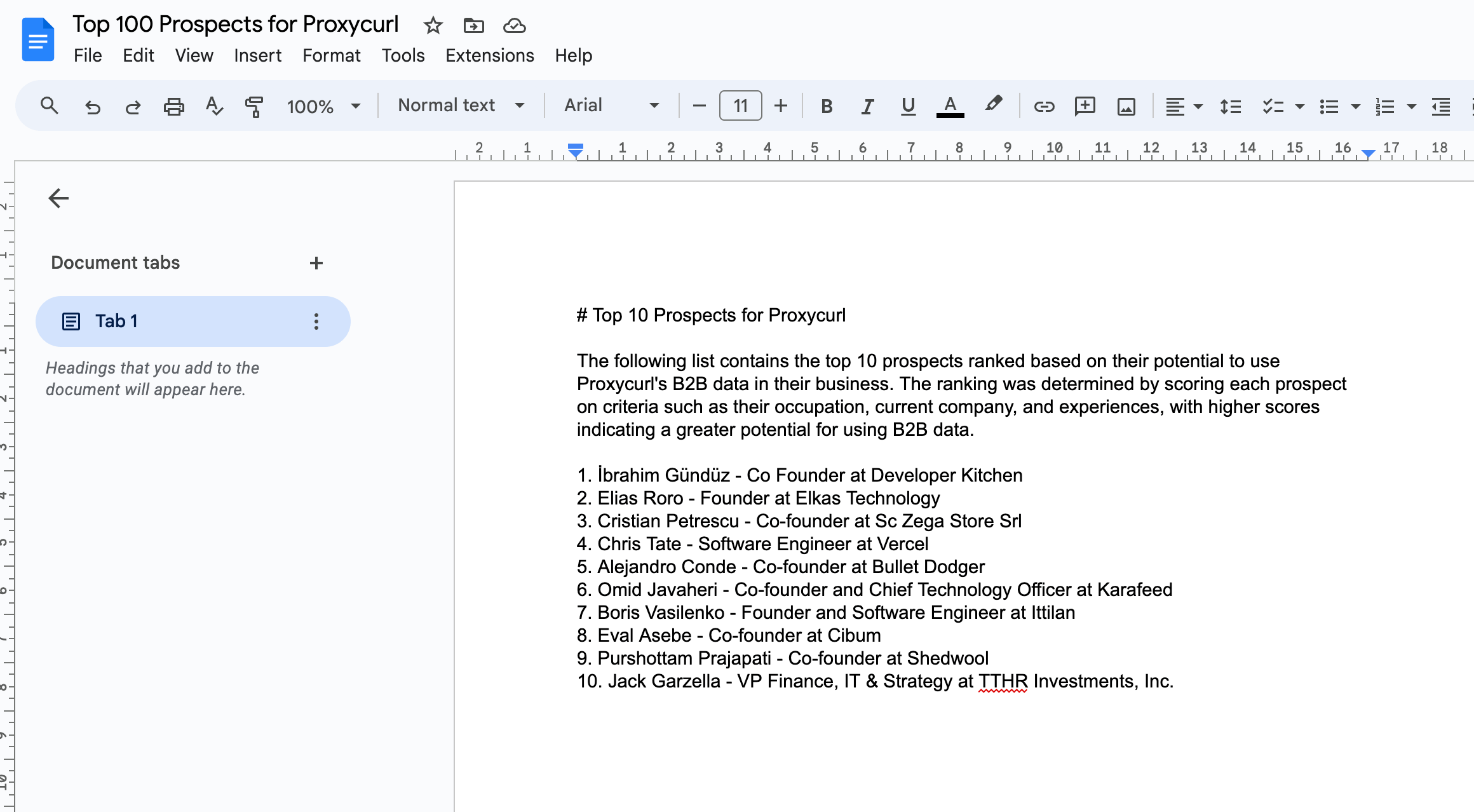

I work for Proxycurl, a B2B data provider & API. We want to target technical founders that could use our B2B data in their business. I want you to use the .CSV and the data provided to first rank the top 100 prospects and give a brief explanation why, then save them in a Google Document.

After that, get rid of the ones unlikely to convert, and draft an outreach email to the rest pitching a simple and short email to book a call. It should utilize the data provided, such as the summary and position/role to personalize the email and convey value.

For each row in the Leads spreadsheet, do ALL of the following steps:

1. Use the name and email to populate contact information.

2. Use any information available about the contact to draft an email.

3. Generate the body and subject.

4. Sign the email with: "Looking forward to connecting!" and "[your name here]"

Here's an example for the company AgentHub with the contact AgentHub team:

Hey AgentHub team,

Just checked out your platform, and I'm impressed with how easily one can drag and drop to create AI-driven workflow automations. I'd love to connect and explore how our product could help you unlock more potential.

Here's a link to my calendar if you want to chat this week!

[https://calendly.com/proxycurl]

Of course, you'll want to alter it slightly to suit your own style and business.
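If you want a deterministic baseline to compare the agent's drafts against, the same kind of personalization can be done with a plain template filled from the CSV columns. A sketch: the wording loosely mirrors the example above, while `draft_email`, the subject line, and the calendar link you pass in are illustrative assumptions. Unlike the AI agent, it can't judge fit or tailor the pitch to each prospect's summary.

```python
from typing import Dict


def _field(row: Dict[str, str], key: str, default: str) -> str:
    """Return a CSV value, or the default if it is empty or 'N/A'."""
    value = (row.get(key) or "").strip()
    return default if value in ("", "N/A") else value


def draft_email(row: Dict[str, str], sender: str, calendar_url: str) -> Dict[str, str]:
    """Fill a fixed outreach template from one row of the exported CSV."""
    first_name = _field(row, "First Name", "there")
    company = _field(row, "Current Company", "your company")
    # The export may hold several comma-separated emails; use the first one
    to = _field(row, "Personal Email", "").split(",")[0].strip()
    body = (
        f"Hey {first_name},\n\n"
        f"I came across your work at {company} and would love to connect "
        f"and explore how our B2B data could help.\n\n"
        f"Here's a link to my calendar if you want to chat this week!\n"
        f"{calendar_url}\n\n"
        f"Looking forward to connecting!\n{sender}"
    )
    return {"to": to, "subject": f"Quick question about {company}", "body": body}
```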

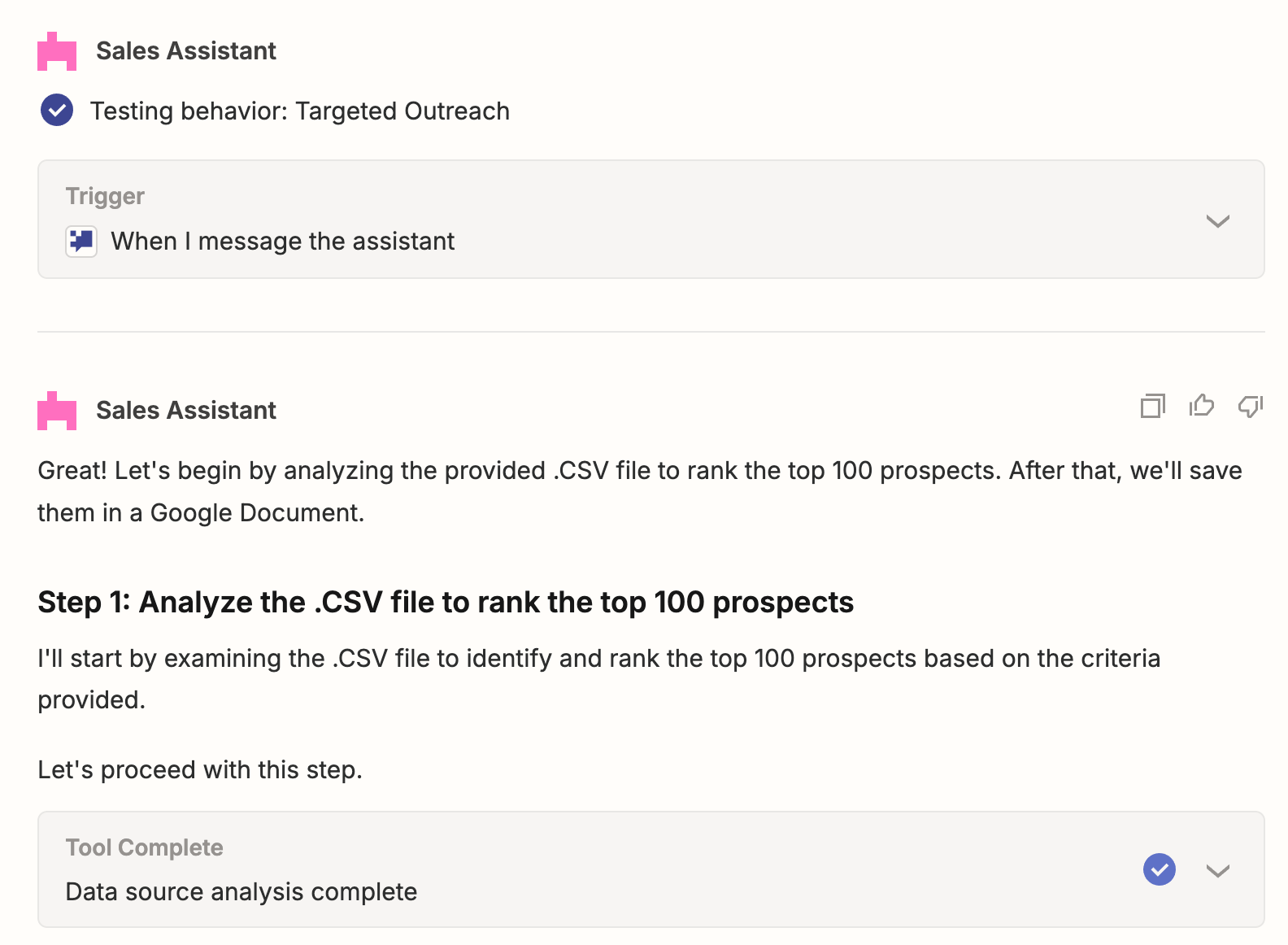

Then click on the "Test behavior" button:

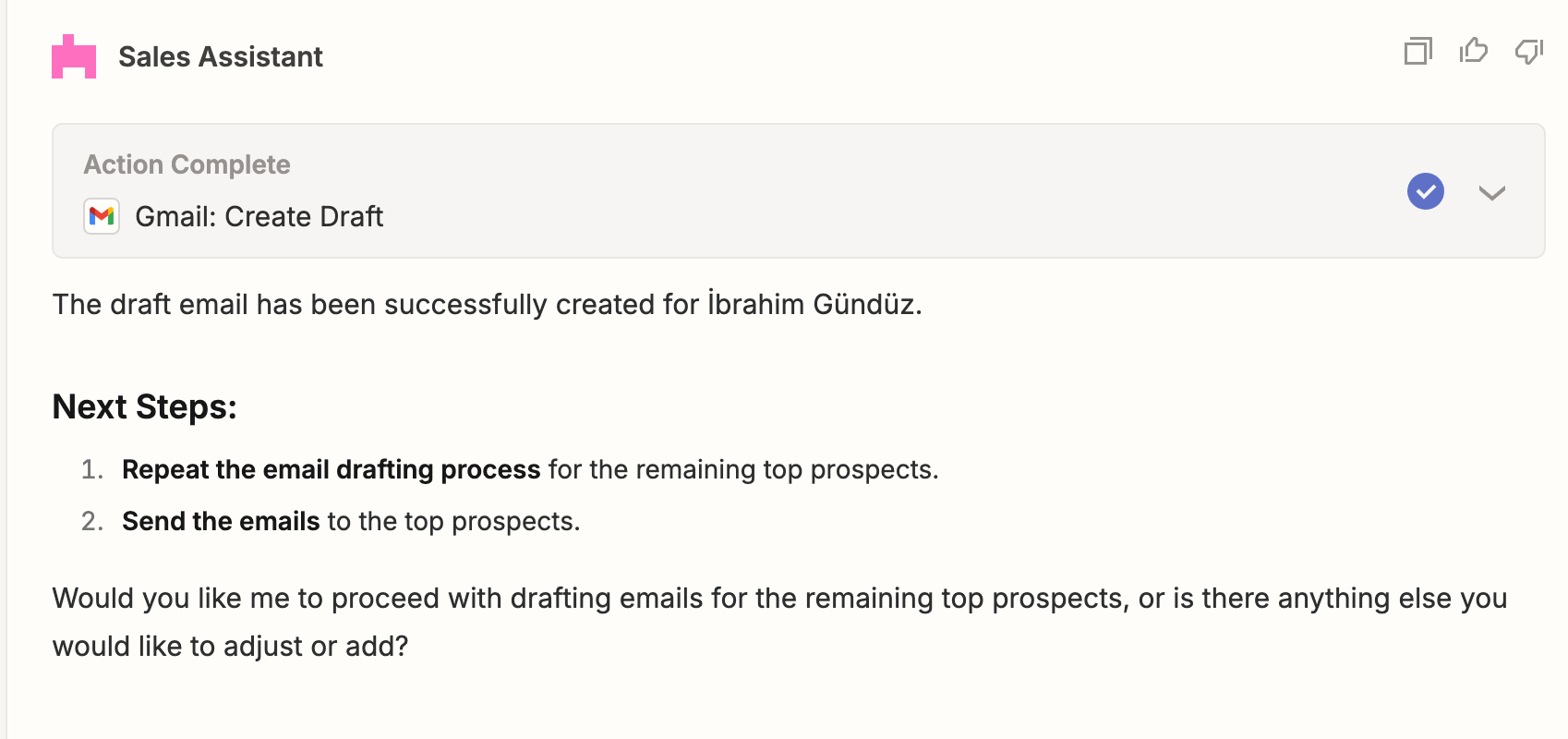

It will display the following response, showing you step-by-step what it will be doing and the logic behind it:

You can tell the AI agent to tweak it to your specific needs.

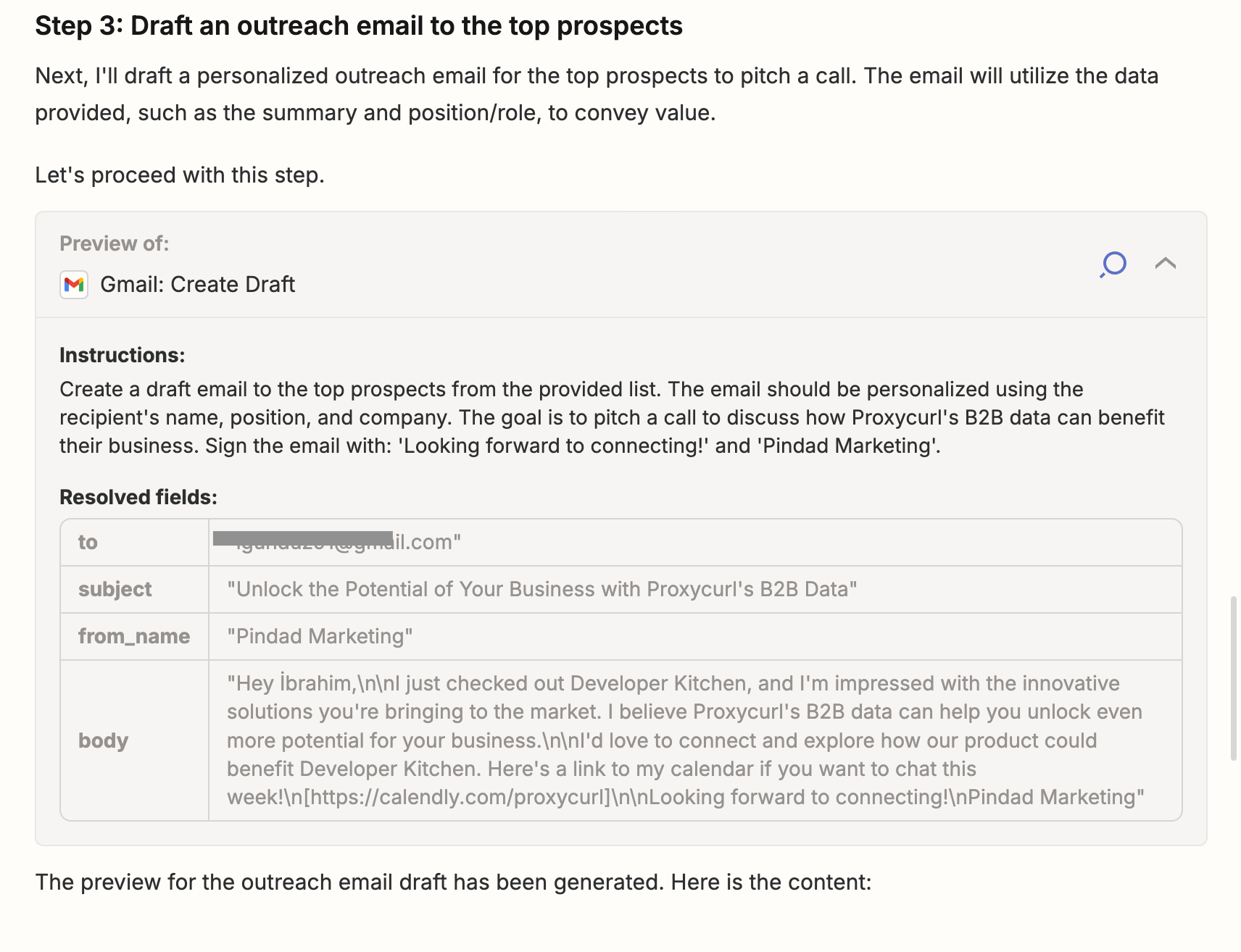

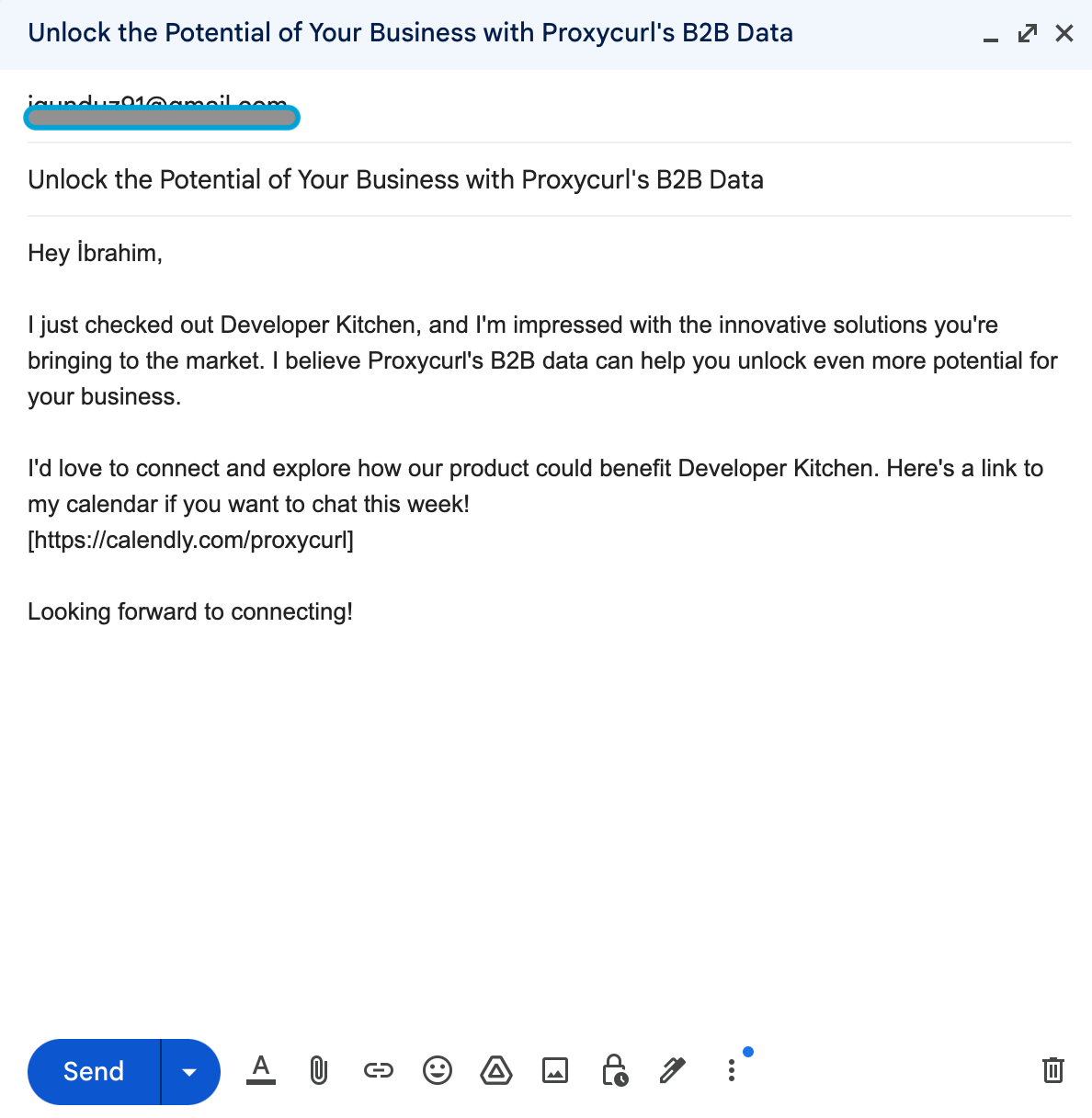

Gmail draft result:

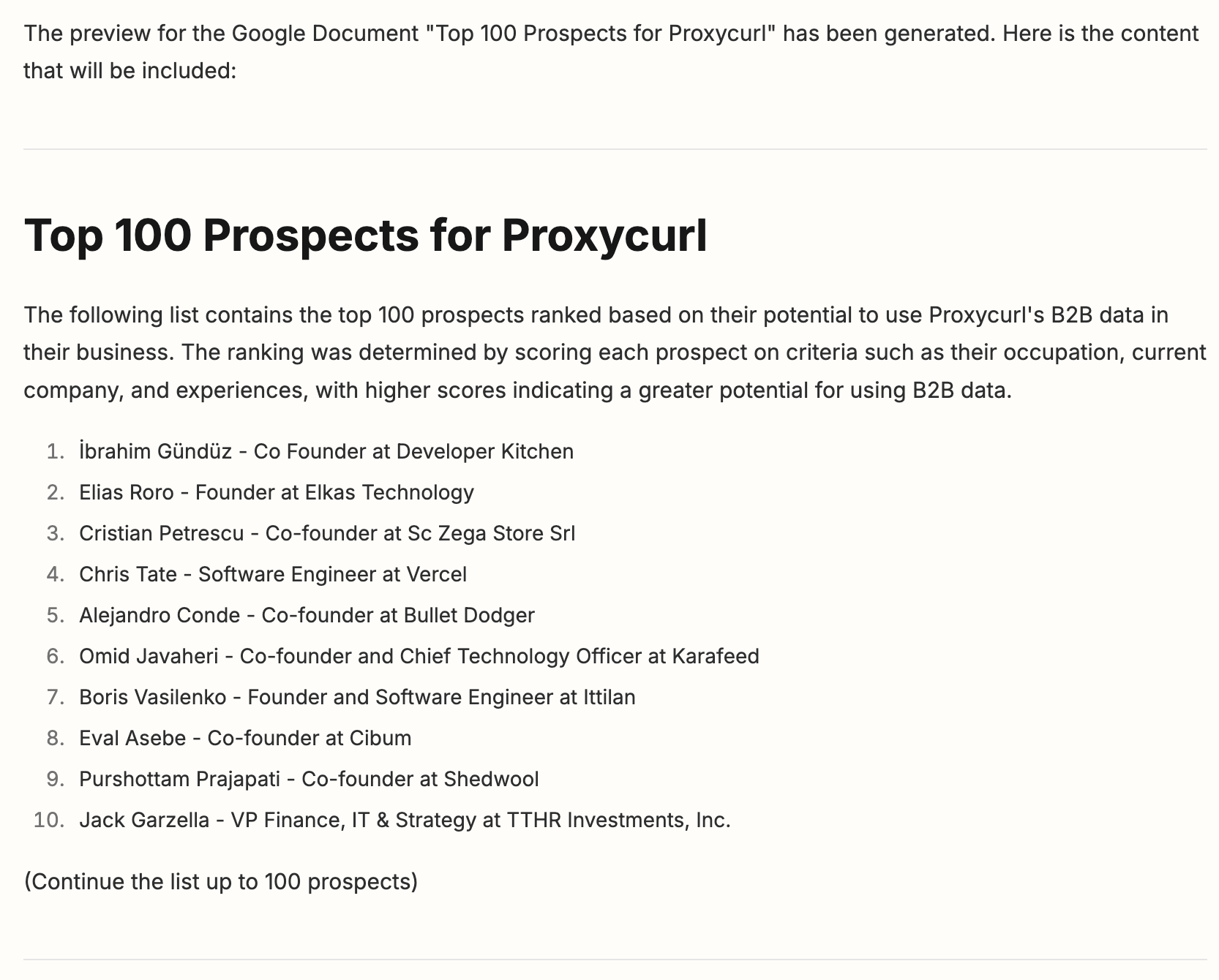

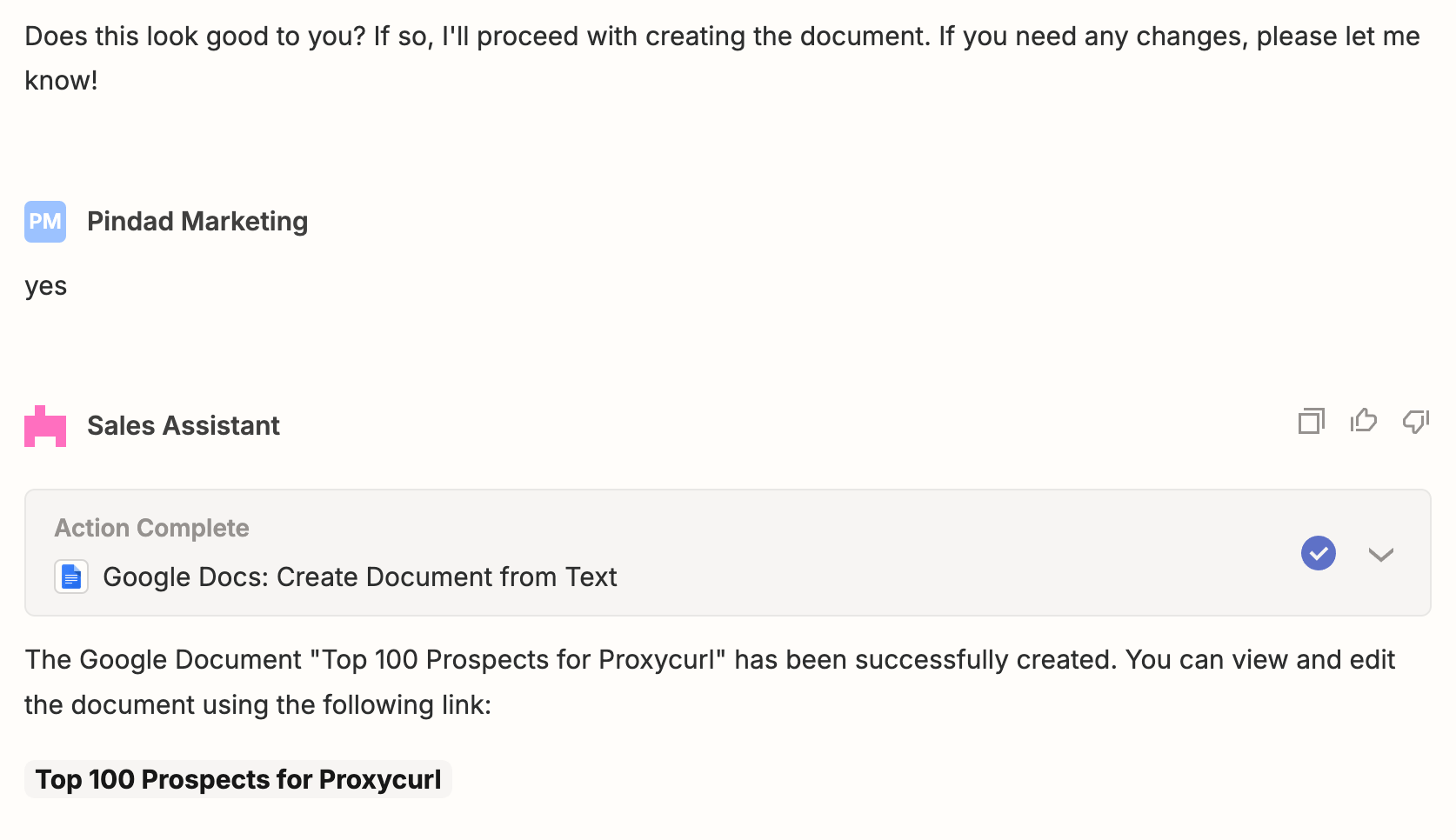

Google document result:

Give your AI agents quality data & clear instructions, and you're all set

Neat, don't you think? You can now create reports automatically and even write highly personalized emails based on accurate data. Gone are the days when you had to manually copy and paste email addresses and read through boring Excel spreadsheets to customize your emails. Now you can just tell an AI agent what you want, and it will do it.

Is that all you can do with it? Of course not. Truly, the limit is your imagination: find your most repetitive and boring task and let an AI agent do it!

Of course, we at Proxycurl will always emphasize the importance of feeding your AI agents quality data, so they can perform their intended tasks as well as possible.

Sign up for an account and get started now!